I’m a bit late to the party, but I just installed RC2 for Anki 24.10 and optimized my deck with FSRS-5. My RMSE went down from 2.78% to 1.57% with the Python optimizer (1.61% with the built-in one), but what concerns me is that nearly all of my cards now have a difficulty of 0%, including cards with rating histories like:

123123232323223333333

122232233331333

and even 12333

My desired retention is 96%, but my true retention is 98.9% over my full 189 days of use (I reformed my habits less than 5 months ago and have ignored reviews since 6/14/2024 when optimizing), and I don’t review tons of cards every day. I know that difficulty won’t affect my reviews, but it certainly doesn’t feel like 0% difficulty each time I see a card.

I even tried raising my desired retention to 98% and rescheduling cards on change, but most cards still show 0% difficulty, and according to the projected forecast my peak workload is 41 cards in the 80-89 day range.

I also simulated some reviews on a blank new card: answering 1, 2, 2, 3 took the difficulty from 65% to 36% to 17% to 0%. Interestingly enough, the FSRS Visualizer says it should go from 65.38% to 57.46% to 51.71% to 37.56%. Here are my FSRS v5.2.0 optimized parameters: [6.7006, 18.4477, 18.1612, 18.1421, 6.8842, 0.8254, 1.2354, 0.2736, 1.8441, 0.0, 1.3177, 2.0384, 0.0044, 0.3869, 2.4027, 0.3577, 3.2153, 0.8074, 0.937]
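
In case it helps anyone reproduce this, here is a rough Python sketch of the FSRS-5 difficulty update as I understand it from the public algorithm description: initial difficulty `w4 - exp(w5 * (G - 1)) + 1`, a change of `-w6 * (G - 3)` per review, and mean reversion toward the initial difficulty for Easy with weight `w7`. The two switches (`damping` and `clamp_target`) are my own assumptions about where implementations might differ, and the function names are made up for illustration. This is not taken from the Anki or Visualizer source.

```python
import math

# Parameters copied from this post (FSRS v5.2.0 optimizer output).
W = [6.7006, 18.4477, 18.1612, 18.1421, 6.8842, 0.8254, 1.2354, 0.2736,
     1.8441, 0.0, 1.3177, 2.0384, 0.0044, 0.3869, 2.4027, 0.3577,
     3.2153, 0.8074, 0.937]


def clamp(d):
    """Keep difficulty inside the [1, 10] range."""
    return min(max(d, 1.0), 10.0)


def init_difficulty(w, rating):
    """Unclamped initial difficulty for a first rating (1=Again .. 4=Easy)."""
    return w[4] - math.exp(w[5] * (rating - 1)) + 1


def next_difficulty(w, d, rating, damping, clamp_target):
    """One difficulty update. Both flags are assumptions, not confirmed behavior."""
    delta = -w[6] * (rating - 3)
    if damping:
        # Assumed "linear damping": changes shrink as d approaches 10.
        delta *= (10 - d) / 9
    target = init_difficulty(w, 4)
    if clamp_target:
        # Assumed variant: the mean-reversion target is clamped to [1, 10].
        target = clamp(target)
    return clamp(w[7] * target + (1 - w[7]) * (d + delta))


def simulate(w, ratings, damping, clamp_target):
    """Return the difficulty after each rating, as a percentage of the 1..10 range."""
    d = clamp(init_difficulty(w, ratings[0]))
    history = [d]
    for rating in ratings[1:]:
        d = next_difficulty(w, d, rating, damping, clamp_target)
        history.append(d)
    return [round((x - 1) / 9 * 100, 2) for x in history]


ratings = [1, 2, 2, 3]
variant_a = simulate(W, ratings, damping=True, clamp_target=False)
variant_b = simulate(W, ratings, damping=False, clamp_target=True)
print("damping, unclamped target:", variant_a)
print("no damping, clamped target:", variant_b)
```

With my parameters, the first variant rounds to the 65%, 36%, 17%, 0% that Anki shows, and the second lands on the Visualizer's 65.38%, 57.46%, 51.71%, 37.56%. With `w4 = 6.8842` and `w5 = 0.8254`, the unclamped initial difficulty for Easy comes out around -4, so if the target really is left unclamped, every review pulls difficulty toward a value below the minimum. That would fit what I'm seeing, but I can't confirm that this is what either tool actually does.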

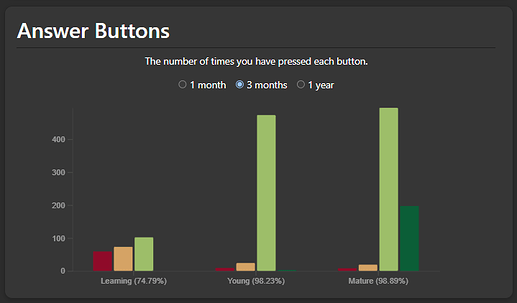

Can I do anything about this? I would love to hear any experts’ thoughts on this and am willing to run tests. I’m not sure if this is a bug or (more likely) it’s just me, but here are some of my stats (after optimization, and, of course, I’m testing with backups):

Before optimization: