If I understand the FSRS algorithm correctly (see the wiki on GitHub: open-spaced-repetition/fsrs4anki/wiki/The-Algorithm), a card on which I have always pressed “Good” should keep the default difficulty as its difficulty rating (D_0(3) = w_4 in the parameters).

However, this is not the case for many cards in my collection. For example, here are my current parameters:

0.1382, 1.9344, 6.5874, 14.9103, 5.1296, 1.1898, 0.7920, 0.1162, 1.6373, 0.0543, 0.9845, 2.1698, 0.0186, 0.3497, 1.5463, 0.1390, 2.8237

In particular, w_4 = 5.1296, which means the default difficulty is 51.296%.
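To make my reading of the wiki concrete, here is a minimal sketch of the difficulty update as I understand it for the 17-parameter version (FSRS v4/4.5). The function names are my own, and I am assuming the formulas D_0(G) = w_4 - (G - 3)·w_5 and D' = w_7·D_0(3) + (1 - w_7)·(D - w_6·(G - 3)), with difficulty clamped to the 1..10 scale; this may not match what Anki actually runs.

```python
# Sketch of the FSRS v4/4.5 difficulty update as I read it from the wiki.
# Assumption: 17-parameter version, difficulty on a 1..10 scale.
W = [0.1382, 1.9344, 6.5874, 14.9103, 5.1296, 1.1898, 0.7920, 0.1162,
     1.6373, 0.0543, 0.9845, 2.1698, 0.0186, 0.3497, 1.5463, 0.1390, 2.8237]


def clamp(d):
    """Keep difficulty inside the 1..10 range."""
    return min(max(d, 1.0), 10.0)


def initial_difficulty(w, grade):
    """D_0(G) = w_4 - (G - 3) * w_5, with grades 1=Again .. 4=Easy."""
    return clamp(w[4] - (grade - 3) * w[5])


def next_difficulty(w, d, grade):
    """D' = w_7 * D_0(3) + (1 - w_7) * (D - w_6 * (G - 3))."""
    return clamp(w[7] * w[4] + (1 - w[7]) * (d - w[6] * (grade - 3)))


def difficulty_after(w, grades):
    """Replay a review history (list of grades) and return the final difficulty."""
    d = initial_difficulty(w, grades[0])
    for g in grades[1:]:
        d = next_difficulty(w, d, g)
    return d


always_good = difficulty_after(W, [3] * 10)    # ten "Good" answers in a row
one_again = difficulty_after(W, [3, 1, 3, 3])  # a single "Again" in the history

print(always_good, one_again)
```

Under these assumptions, `always_good` stays at w_4 no matter how many times I press “Good”, while a single “Again” in the history (`one_again`) pushes the difficulty above w_4.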

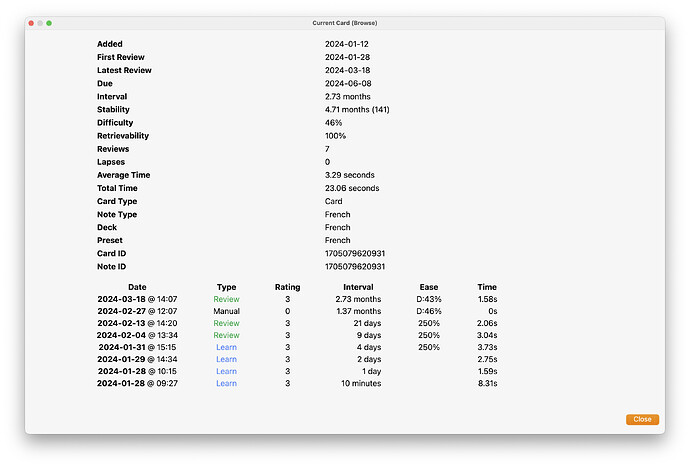

And here is one of my cards:

After optimising the parameters today, FSRS assigns a difficulty of 72% to this card! Why is that?