No one needs to understand it. We’ll name it “Default”. No explanation. It might also pave the way for a “Custom” sort order: there would be “Default” and there would be “Custom”.

The further the card is in the backlog, the more this connection is visible and vice versa.

Not necessarily. The card may have a higher D only because it is an old card that has already been failed a couple of times.

The earlier the card was entered, the more S it will have. But this may not happen with D.

R: If you have a large backlog, then the cards that you constantly fail will still be selected first, since their R will always be higher than that of cards that have been overdue for some time.

S: Does not take into account how long the card is in the backlog.

D: The same disadvantages as S, but it can also work completely differently in different presets. A high D value is not always bad (old cards), and a low D value is not always good (young cards).

I hope Relative overdueness is not going anywhere. Useful sorting for filtered decks if you are doing reviews for future dates.

So, turns out PSG cannot maintain desired retention at the specified level.

I don’t think we should continue this. I will tell Dae to just implement reverse relative overdueness.

P.S. I know sorata will disagree and say that we should try more sort orders. I will not be doing that.

I’ve come to realise that it’s necessary for us to look at the distribution of R.

What do you mean?

I think you’re right in that how the cards’ R is distributed matters as much as total_learned. You can have retrieval failures for learned stuff too. But that fail is worse than failing to remember something never learned.

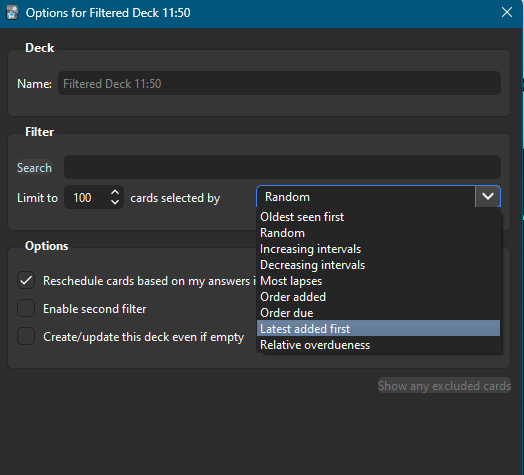

Hey, a bit irrelevant to the discussion but I wanted to point out the missing Sort Orders (e.g. Difficulty Ascending) in Filtered Decks. It would be great to add the new ones there as well

I created a tree of filtered decks that collect the easiest or the most retrievable due cards, more from the high-priority decks than from the low-priority ones. Complex, but it’s to use the Random sort order… and similar cards still appear close to each other because they have similar difficulty.

Would applying Retrievability Descending to cards in their learning phase, after their introduction, be of much use? I don’t know how their sorting works at the moment.

No, if a card is “red”, its R is always 100%.

Ooohh… so that brings us to the other topic about making R change in real time. This makes more sense now. I suppose R values changing in real time would require a lot of processing power. Is it even doable in the first place, provided that Sherlock finds a provisional model for short-term memory, so that this order could apply to learning cards as well?

The forgetting curve in Card Info shows a “real-time” curve, with increments much smaller than one day. The problem is that the scheduling code can only deal with days (whole numbers).

Perhaps if we could prove that a code change improves overall learning efficacy, it would be worth the effort after all. That is if dae agrees, of course.

Do you mean this? What is the purpose of this?

Good luck convincing Dae

If this has a significant effect during learning, perhaps he may be convinced by it.

But does it really influence learning that much?

That is the question. We don’t have a sim. We need a sim.

By the way I think that any improvements in FSRS from now on would be marginal unless something groundbreaking occurs. So I am of the opinion that if there is an improvement that can be made and the resources are available, we should do it.

I did some experimentation in Excel and wanted to share my results. Let’s say that the stability and days elapsed of currently due cards are as follows:

| Stability | Elapsed Days | R | PRL |
|---|---|---|---|
| 1 | 2 | 0.825 | 0.05890 |
| 1 | 3 | 0.766 | 0.04785 |
| 1 | 50 | 0.280 | 0.00255 |
| 1 | 100 | 0.202 | 0.00096 |
| 5 | 5 | 0.900 | 0.01663 |
| 5 | 8 | 0.853 | 0.01418 |
| 5 | 50 | 0.547 | 0.00379 |
| 5 | 100 | 0.419 | 0.00172 |
| 50 | 52 | 0.897 | 0.00169 |
| 50 | 100 | 0.825 | 0.00131 |
| 50 | 160 | 0.756 | 0.00101 |
| 100 | 100 | 0.900 | 0.00085 |
| 100 | 150 | 0.860 | 0.00075 |
| 100 | 200 | 0.825 | 0.00066 |
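For anyone who wants to reproduce these numbers outside Excel, here is a minimal Python sketch. It rests on two assumptions that the thread does not state: that R follows the FSRS-4.5 power forgetting curve, and that PRL is the drop in R if the review is delayed by one more day. Both appear to match the tabulated values to the precision shown, but the function names and the PRL definition are inferred, not confirmed.

```python
# Assumption: FSRS-4.5 power forgetting curve; the thread does not state it.
DECAY = -0.5
FACTOR = 19 / 81  # chosen so that R = 0.9 when elapsed days == stability


def retrievability(stability: float, elapsed_days: float) -> float:
    """Estimated probability of recall after `elapsed_days`."""
    return (1 + FACTOR * elapsed_days / stability) ** DECAY


def prl(stability: float, elapsed_days: float) -> float:
    """Assumed PRL: how much R would drop if the review waited one more day."""
    today = retrievability(stability, elapsed_days)
    tomorrow = retrievability(stability, elapsed_days + 1)
    return today - tomorrow


# A few (stability, elapsed days) pairs taken from the table above
cards = [(1, 2), (1, 3), (5, 5), (50, 52), (100, 100)]
for s, t in cards:
    print(f"S={s:>3} t={t:>3} R={retrievability(s, t):.3f} PRL={prl(s, t):.5f}")
```

Under these assumptions, low-stability cards lose R fastest per day, which is why they dominate the PRL column.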

If we sort by descending R, they are shown in the following order:

| Stability | Elapsed Days | R | PRL |
|---|---|---|---|
| 5 | 5 | 0.900 | 0.01663 |
| 100 | 100 | 0.900 | 0.00085 |
| 50 | 52 | 0.897 | 0.00169 |
| 100 | 150 | 0.860 | 0.00075 |
| 5 | 8 | 0.853 | 0.01418 |
| 1 | 2 | 0.825 | 0.05890 |
| 50 | 100 | 0.825 | 0.00131 |
| 100 | 200 | 0.825 | 0.00066 |
| 1 | 3 | 0.766 | 0.04785 |
| 50 | 160 | 0.756 | 0.00101 |
| 5 | 50 | 0.547 | 0.00379 |
| 5 | 100 | 0.419 | 0.00172 |
| 1 | 50 | 0.280 | 0.00255 |
| 1 | 100 | 0.202 | 0.00096 |

@Expertium, here, a card with S = 1 which was due yesterday (elapsed = 2) has a very low priority.

(Please remember that I may have hundreds of cards with S and elapsed days similar to the cards above this particular card.)

Do you really think that this card should have such a low priority? I don’t think so, and that’s why I have used ascending R (relative overdueness) for a long time. The ascending R sort order is problematic if you have some cards that are highly overdue (read the above table from bottom to top), but I usually don’t have such cards, so it’s not that problematic for me.

Now, let’s see what happens when we sort by descending PRL.

| Stability | Elapsed Days | R | PRL |
|---|---|---|---|
| 1 | 2 | 0.825 | 0.05890 |
| 1 | 3 | 0.766 | 0.04785 |
| 5 | 5 | 0.900 | 0.01663 |
| 5 | 8 | 0.853 | 0.01418 |
| 5 | 50 | 0.547 | 0.00379 |
| 1 | 50 | 0.280 | 0.00255 |
| 5 | 100 | 0.419 | 0.00172 |
| 50 | 52 | 0.897 | 0.00169 |
| 50 | 100 | 0.825 | 0.00131 |
| 50 | 160 | 0.756 | 0.00101 |
| 1 | 100 | 0.202 | 0.00096 |
| 100 | 100 | 0.900 | 0.00085 |
| 100 | 150 | 0.860 | 0.00075 |
| 100 | 200 | 0.825 | 0.00066 |

Here, the initial part of the queue makes much more sense. However, there are some problems. Writing cards as (stability, elapsed days), (1,50) comes before (50,52), but I believe that one should review a card with a 50-day interval that is just 2 days overdue before a card with a 1-day interval that is 49 days overdue. Similarly, (1,100) comes before (100,100), which just doesn’t make sense.

So, I believe that none of descending R, ascending R and descending PRL is perfect. We should try to find something that combines the best properties of each.

Finding a completely new parameter may not be necessary. We could make a rule such as: descending PRL, but if R < 0.5, then descending R. This example rule is only meant to show that two sort orders can be combined into one. I have not tested what results it would produce.
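One possible reading of that rule, sketched in Python: cards with R at or above the threshold come first, ordered by descending PRL, and cards below it follow, ordered by descending R. The grouping is only one interpretation of the rule. The sketch also assumes the FSRS-4.5 forgetting curve and takes PRL as the one-day drop in R, which appears to reproduce the tabulated values. The names `combined_key` and `threshold` are hypothetical.

```python
DECAY = -0.5        # assumed FSRS-4.5 curve constants
FACTOR = 19 / 81


def retrievability(stability, elapsed_days):
    return (1 + FACTOR * elapsed_days / stability) ** DECAY


def prl(stability, elapsed_days):
    # Assumed definition: drop in R over the next day
    return retrievability(stability, elapsed_days) - retrievability(
        stability, elapsed_days + 1
    )


def combined_key(card, threshold=0.5):
    """Sort key: group 0 (R >= threshold) by descending PRL,
    then group 1 (R < threshold) by descending R."""
    s, t = card
    r = retrievability(s, t)
    if r >= threshold:
        return (0, -prl(s, t))
    return (1, -r)


# (stability, elapsed days) pairs from the tables in this thread
cards = [
    (1, 2), (1, 3), (1, 50), (1, 100),
    (5, 5), (5, 8), (5, 50), (5, 100),
    (50, 52), (50, 100), (50, 160),
    (100, 100), (100, 150), (100, 200),
]
queue = sorted(cards, key=combined_key)
for s, t in queue:
    print(s, t, round(retrievability(s, t), 3), round(prl(s, t), 5))
```

With this reading, (50,52) moves ahead of (1,50), but the queue has a hard discontinuity at the threshold, which is the kind of behavior that would need a simulation to evaluate.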

Interesting. I don’t think that switching to a different order for some cards is a good idea though, as it could cause some weird bugs and it makes it harder to analyze how this sort order behaves.

But out of curiosity @L.M.Sherlock I’d like you to simulate vaibhav’s idea of switching between PRL and descending R.

It seems that I have got the perfect sort order (descending PRL + 0.1*R).

| Stability | Elapsed Days | R | PRL | PRL + 0.1 * R |
|---|---|---|---|---|
| 1 | 2 | 0.825 | 0.05890 | 0.1414 |
| 1 | 3 | 0.766 | 0.04785 | 0.1245 |
| 5 | 5 | 0.900 | 0.01663 | 0.1066 |
| 5 | 8 | 0.853 | 0.01418 | 0.0995 |
| 50 | 52 | 0.897 | 0.00169 | 0.0913 |
| 100 | 100 | 0.900 | 0.00085 | 0.0909 |
| 100 | 150 | 0.860 | 0.00075 | 0.0868 |
| 50 | 100 | 0.825 | 0.00131 | 0.0838 |
| 100 | 200 | 0.825 | 0.00066 | 0.0832 |
| 50 | 160 | 0.756 | 0.00101 | 0.0766 |
| 5 | 50 | 0.547 | 0.00379 | 0.0585 |
| 5 | 100 | 0.419 | 0.00172 | 0.0436 |
| 1 | 50 | 0.280 | 0.00255 | 0.0306 |
| 1 | 100 | 0.202 | 0.00096 | 0.0212 |

Experimentation with some more datapoints may be necessary to ensure that this doesn’t behave weirdly.

Based on the results, you can adjust the “0.1” weight or try a different weighting formula to produce the best “score”.
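To make it easier to try other weights, here is a small Python sketch of the score. It assumes the FSRS-4.5 forgetting curve and takes PRL as the one-day drop in R, which is inferred from the tabulated values rather than stated anywhere in the thread. The names `score` and `weight` are hypothetical.

```python
DECAY = -0.5        # assumed FSRS-4.5 curve constants
FACTOR = 19 / 81


def retrievability(stability, elapsed_days):
    return (1 + FACTOR * elapsed_days / stability) ** DECAY


def prl(stability, elapsed_days):
    # Assumed definition: drop in R over the next day
    return retrievability(stability, elapsed_days) - retrievability(
        stability, elapsed_days + 1
    )


def score(card, weight=0.1):
    """PRL + weight * R; higher scores are reviewed first."""
    s, t = card
    return prl(s, t) + weight * retrievability(s, t)


# (stability, elapsed days) pairs from the first table in this thread
cards = [
    (1, 2), (1, 3), (1, 50), (1, 100),
    (5, 5), (5, 8), (5, 50), (5, 100),
    (50, 52), (50, 100), (50, 160),
    (100, 100), (100, 150), (100, 200),
]
queue = sorted(cards, key=score, reverse=True)
for card in queue:
    print(card, round(score(card), 4))
```

Passing a different weight, for example `sorted(cards, key=lambda c: score(c, weight=0.05), reverse=True)`, shows how the balance between PRL and R shifts the queue.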

I added some more datapoints. It still looks good to me.

| Stability | Elapsed Days | R | PRL | PRL + 0.1 * R |
|---|---|---|---|---|
| 1 | 2 | 0.825 | 0.05890 | 0.1414 |
| 1 | 3 | 0.766 | 0.04785 | 0.1245 |
| 5 | 5 | 0.900 | 0.01663 | 0.1066 |
| 5 | 8 | 0.853 | 0.01418 | 0.0995 |
| 20 | 20 | 0.900 | 0.00424 | 0.0942 |
| 50 | 52 | 0.897 | 0.00169 | 0.0913 |
| 100 | 100 | 0.900 | 0.00085 | 0.0909 |
| 100 | 150 | 0.860 | 0.00075 | 0.0868 |
| 50 | 100 | 0.825 | 0.00131 | 0.0838 |
| 100 | 200 | 0.825 | 0.00066 | 0.0832 |
| 20 | 50 | 0.794 | 0.00292 | 0.0823 |
| 50 | 160 | 0.756 | 0.00101 | 0.0766 |
| 20 | 100 | 0.678 | 0.00182 | 0.0697 |
| 20 | 150 | 0.602 | 0.00128 | 0.0615 |
| 5 | 50 | 0.547 | 0.00379 | 0.0585 |
| 5 | 100 | 0.419 | 0.00172 | 0.0436 |
| 5 | 150 | 0.353 | 0.00103 | 0.0363 |
| 1 | 50 | 0.280 | 0.00255 | 0.0306 |
| 1 | 100 | 0.202 | 0.00096 | 0.0212 |