Optimizing per deck is better if the material in the decks is very different.

Hm, I find it difficult to assess in practice whether two decks are sufficiently different.

Can we rely on the loss after training as an indicator (cf. your explanation here)?

- If the loss is smaller when the whole collection is optimized at once, then it is more efficient to use one set of parameters for the whole collection.

- If the per-deck optimizations return smaller losses after training, then it is more efficient to use a separate set of parameters for each deck.

… Correct?
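If you compare losses this way, note that the optimizer's loss is presumably an average per review, so the per-deck losses should be weighted by each deck's review count before being compared with the collection-wide loss. The sketch below assumes hypothetical `FitResult` records holding a mean log loss and a review count for each optimizer run; the numbers in the usage example are made up. Also keep in mind that training loss is measured on the same reviews the parameters were fit to, so per-deck fits, having more free parameters, will tend to look at least as good; a held-out split of reviews would be a fairer test.

```python
from dataclasses import dataclass


@dataclass
class FitResult:
    """Hypothetical summary of one optimizer run (not an FSRS4Anki type)."""
    name: str
    mean_log_loss: float  # average log loss per review after training
    n_reviews: int        # number of reviews the run was trained on


def weighted_loss(results: list[FitResult]) -> float:
    """Review-weighted average of per-deck losses.

    An unweighted mean would let a small deck count as much as a large one,
    which is not comparable with a single collection-wide average.
    """
    total = sum(r.n_reviews for r in results)
    return sum(r.mean_log_loss * r.n_reviews for r in results) / total


def prefer_per_deck(collection: FitResult, decks: list[FitResult]) -> bool:
    """True if the per-deck fits have a lower combined loss than the collection fit."""
    return weighted_loss(decks) < collection.mean_log_loss


# Illustrative numbers only.
decks = [FitResult("Deck A", 0.30, 1000), FitResult("Deck B", 0.40, 3000)]
collection = FitResult("Whole collection", 0.38, 4000)
print(weighted_loss(decks), prefer_per_deck(collection, decks))
```

Here the weighted per-deck loss is 0.375, slightly below the collection's 0.38, whereas a naive unweighted mean (0.35) would overstate the advantage of splitting.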

Early access to my new paper about a spaced repetition algorithm:

Sorry for the paywall. Open access publishing costs $1,750, which is too expensive for me. I will share some details in a few days.

Hey, I’ve been using it for months and I have a question. Before using FSRS, my retention was 90%. I had a drop in cards the first few days (as expected), but over the weeks I’ve been using the add-on, the number of cards has increased (from an average of 80 to around 150-180 now) and so has my retention rate (92% for the last month). Why might this be?

Is there a way to adjust the retention rate?

Yes. It’s 90% by default.

How do we adjust it?

Look at the text you pasted into your options; the requestRetention value there sets it.
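For intuition on what that setting does, here is a minimal sketch assuming the exponential forgetting curve R = 0.9^(t/S) used by early FSRS versions (later versions switched to a different curve), where S is the card's stability in days. Solving for the time at which R falls to the requested retention r gives t = S · ln(r) / ln(0.9), so raising r shortens intervals and increases the daily review load. `next_interval` is a hypothetical name, not the scheduler's actual code.

```python
import math


def next_interval(stability: float, request_retention: float) -> float:
    """Days until predicted recall probability falls to request_retention.

    Assumes the exponential forgetting curve R(t) = 0.9 ** (t / S), under which
    stability S is exactly the interval at which R drops to 90%.
    """
    if not 0 < request_retention < 1:
        raise ValueError("request_retention must be in (0, 1)")
    # Solve 0.9 ** (t / S) = r  =>  t = S * ln(r) / ln(0.9)
    return stability * math.log(request_retention) / math.log(0.9)


for r in (0.85, 0.90, 0.95):
    print(r, round(next_interval(10.0, r), 1))
```

With S = 10 days this gives about 15.4 days at 0.85, exactly 10 days at 0.90 and about 4.9 days at 0.95.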

Having some trouble with the optimiser today. A problem with my file perhaps? Never had any issues before.

```
revlog.csv saved.
---------------------------------------------------------------------------
AttributeError                            Traceback (most recent call last)
<ipython-input-10-0ae3437af148> in <cell line: 58>()
     56     return x
     57 
---> 58 tqdm.notebook.tqdm.pandas()
     59 df = df.groupby('cid', as_index=False, group_keys=False).progress_apply(get_feature)
     60 df = df[df['id'] >= time.mktime(datetime.strptime(revlog_start_date, "%Y-%m-%d").timetuple()) * 1000]

AttributeError: module 'tqdm' has no attribute 'notebook'
```

It is caused by an update to tqdm. I will fix it. Could you report it in the FSRS4Anki repo?
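Until a fix lands, one common workaround for this kind of `AttributeError` is to import the submodule explicitly: in Python, `package.submodule` only resolves as an attribute once that submodule has actually been imported, and a bare `import tqdm` does not guarantee that `tqdm.notebook` is loaded. This is an assumption about the cause (the thread only says a tqdm update triggered it), and `require_submodule` is a hypothetical helper; in the notebook itself a plain `import tqdm.notebook` before line 58 would have the same effect.

```python
import importlib
from types import ModuleType


def require_submodule(package: str, name: str) -> ModuleType:
    """Import `package.name` explicitly and return it.

    importlib.import_module imports the parent package first, then the
    submodule, and binds the submodule as an attribute of the parent, so
    `package.name` works afterwards.
    """
    return importlib.import_module(f"{package}.{name}")


if __name__ == "__main__":
    # Hypothetical notebook usage (requires tqdm and pandas to be installed):
    #   notebook = require_submodule("tqdm", "notebook")
    #   notebook.tqdm.pandas()  # registers progress_apply on pandas objects
    # Stdlib demonstration of the same mechanism:
    import xml
    dom = require_submodule("xml", "dom")
    print(dom is xml.dom)  # True
```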

Is there any rationale behind your recommendation of learning steps?

Let time fly and see if FSRS helps.

P.S. This line is more “politically correct”.

For the other version, you can check the message history. Oh, it seems it’s been removed now.

It is because FSRS can’t see all the reviews during learning steps. The longer the learning steps, the less FSRS knows, so long learning steps undermine its ability to predict.

So if I had a lot of cards in the review phase and only a few in (even long) learning phases, it wouldn’t be a big issue, because what the algorithm learns from the mature cards is applied to the learning cards as soon as they leave the learning phase…

I have the same problem as the person who was a medical graduate that posted earlier. I have a good number of cards that show up as:

where “hard” time is longer than “good”.

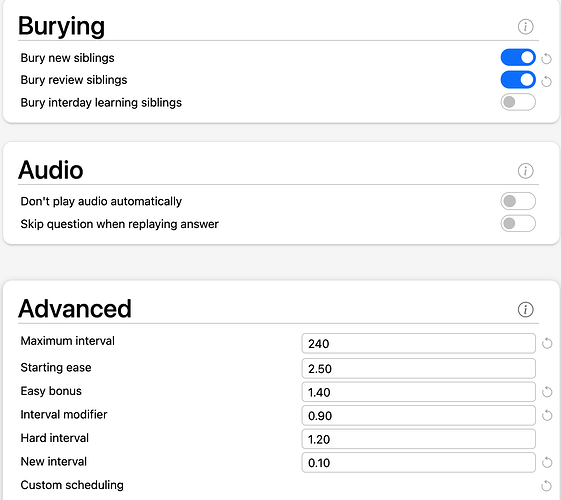

These are my current settings:

Is this normal behavior or is there something wrong with my set up?

That is why I recommend not using learning steps longer than one day: FSRS doesn’t know the intervals used in learning steps.
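The one-day rule is easy to check mechanically. A small sketch, assuming steps are written the way Anki's deck options take them (space-separated values with s/m/h/d suffixes, e.g. `10m 1h 1d`); `parse_steps` and `steps_over_one_day` are hypothetical helpers, not part of FSRS4Anki.

```python
import re

# Seconds per unit in Anki-style step strings (assumed units: s, m, h, d).
_UNIT_SECONDS = {"s": 1, "m": 60, "h": 3600, "d": 86400}
_STEP_RE = re.compile(r"^(\d+(?:\.\d+)?)([smhd])$")
ONE_DAY = 86400


def parse_steps(steps: str) -> list[float]:
    """Convert a space-separated step string such as '10m 1h 1d' to seconds."""
    seconds = []
    for token in steps.split():
        match = _STEP_RE.match(token)
        if match is None:
            raise ValueError(f"unrecognised step: {token!r}")
        value, unit = match.groups()
        seconds.append(float(value) * _UNIT_SECONDS[unit])
    return seconds


def steps_over_one_day(steps: str) -> list[str]:
    """Return the steps that exceed the recommended one-day limit."""
    tokens = steps.split()
    return [tok for tok, sec in zip(tokens, parse_steps(steps)) if sec > ONE_DAY]


print(steps_over_one_day("10m 1h 1d"))     # []
print(steps_over_one_day("10m 1d 3d 7d"))  # ['3d', '7d']
```

A step of exactly one day is allowed, matching “not longer than one day”.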

What does each of the numbers in the parameters mean? I’ve done everything according to your tutorial and optimized them. I had a brand-new card that I marked as Easy, and the next time I saw it, the “Good” interval would have been over a month long! Is this normal? Here are my settings:

A month? How did you set the requestRetention?

I didn’t change it. Everything is default except the parameters, which I got from the optimizer.

Please see this wiki for details about the parameters: